The effects of incentive systems in the academic world on the quality of science

Scientific transparency and appreciation: the key to creating a more sustainable and more objective research landscape

The academic world is characterised by a strict assessment system in which researchers’ achievements are measured against certain criteria. But how exactly does this performance assessment work? What lies behind the notorious publish-or-perish culture? Which bias effects exist besides confirmation bias and publication bias? In addition, questionable research practices such as p-hacking, HARKing or salami slicing raise questions about the credibility of scientific findings. Why is awareness of these aspects important, and how does all this relate to Open Science?

Performance assessment and publish-or-perish culture

Performance assessment plays a central role in the academic world and is an essential part of researchers’ career development. The criteria by which their output is assessed can vary by discipline, institution or country, but certain aspects are generally regarded as indicators of success.

Publications are one of the most important currencies in the academic world: the number and quality of a researcher’s articles largely determine how their career progresses. In economics, for example, journal articles are regarded as one of the main indicators of performance and success. There is a hierarchy of so-called “top journals” or “A journals” with different levels of reputation and relevance. As a rule, a publication in a “top journal” is rated more highly the more often it is cited.

Besides publications, third-party funding is seen as an important indicator of achievement and success. The ability to acquire research projects and to obtain grants from external funders (in Germany, usually the German Research Foundation, DFG) is regarded as a sign of excellence and innovative strength. Research funding programmes, scholarships and institutional grants are often an essential element of academic career development.

Participating in international collaborations and building networks also count as performance criteria. Working with other researchers and taking part in international projects show that a person can work in a global context and contribute to the international research community.

In addition, teaching, student mentoring, services to the scientific community, awards, honours and engagement in academic committees and associations can count as performance indicators, but they carry less weight in appointment procedures than publications. The publish-or-perish culture is therefore closely linked to performance assessment: it refers to the pressure to publish continuously in renowned journals in order to advance one’s career.

All in all, it is important to recognise the complexity of performance assessment and of the publish-or-perish culture. Taking a more comprehensive view of these aspects and recognising the diversity of scientific achievements can help create a more sustainable and balanced academic culture that emphasises quality and integrity.

Against this background, the Coalition for Advancing Research Assessment (CoARA) was established. It is an international coalition of organisations and experts whose main goal is to rethink the current system of research assessment and to develop alternative approaches that capture the quality and value of research more comprehensively.

Awareness of bias effects and the role of Open Science

To ensure the integrity and quality of scientific research, and to understand the rationale of Open Science, it is important to be aware of the different bias effects that can distort study results. One fundamental bias effect is confirmation bias: people tend to favour information that confirms their existing beliefs and to ignore or reject information that contradicts them. This can lead to selective perception, interpretation and reporting, and impair the objectivity of research. Researchers who are aware of confirmation bias can actively take measures to minimise it, e.g. by using blinded study designs, pre-registering hypotheses and sharing data, all of which are typical Open Science practices.

Another relevant bias effect is publication bias: studies with significant or positive results are more likely to be submitted and accepted for publication than studies with non-significant or negative results. Because the latter remain less visible, the published literature gives a distorted picture. By promoting Open Science practices such as publishing null results and pre-registering studies, researchers can help reduce such distortions and enable a more complete picture of research results.
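The distortion caused by publication bias can be made concrete with a small simulation. The following Python sketch is purely illustrative and rests on simplifying assumptions that are not part of the text above: every hypothetical study estimates the same small true effect (0.1, with a standard deviation of 1 and 30 observations per study), significance is judged with a two-sided z-test at the 5% level, and a study is “published” only if its result is significant and positive. All function names are hypothetical.

```python
import random
import statistics

Z_CRIT = 1.96  # two-sided critical value of a z-test at the 5% level


def simulate_studies(true_effect, n_per_study, n_studies, seed=0):
    """Return the estimated effect (sample mean) of each simulated study.

    Each study draws n_per_study observations from a normal distribution
    with mean true_effect and standard deviation 1.
    """
    rng = random.Random(seed)
    return [
        statistics.fmean(rng.gauss(true_effect, 1.0) for _ in range(n_per_study))
        for _ in range(n_studies)
    ]


def is_significant(estimate, n_per_study):
    """Two-sided z-test of the estimate against 0, assuming a known SD of 1."""
    standard_error = 1.0 / n_per_study ** 0.5
    return abs(estimate / standard_error) > Z_CRIT


def published_subset(estimates, n_per_study):
    """Hypothetical publication filter: keep only significant, positive results."""
    return [e for e in estimates if e > 0 and is_significant(e, n_per_study)]


estimates = simulate_studies(true_effect=0.1, n_per_study=30, n_studies=5000)
published = published_subset(estimates, n_per_study=30)
print("true effect:                0.100")
print(f"mean of all studies:        {statistics.fmean(estimates):.3f}")
print(f"mean of published studies:  {statistics.fmean(published):.3f}")
print(f"share of studies published: {len(published) / len(estimates):.1%}")
```

Because only the estimates that happen to clear the significance threshold are kept, the average of the “published” studies lies well above the true effect, while the average over all studies stays close to it.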

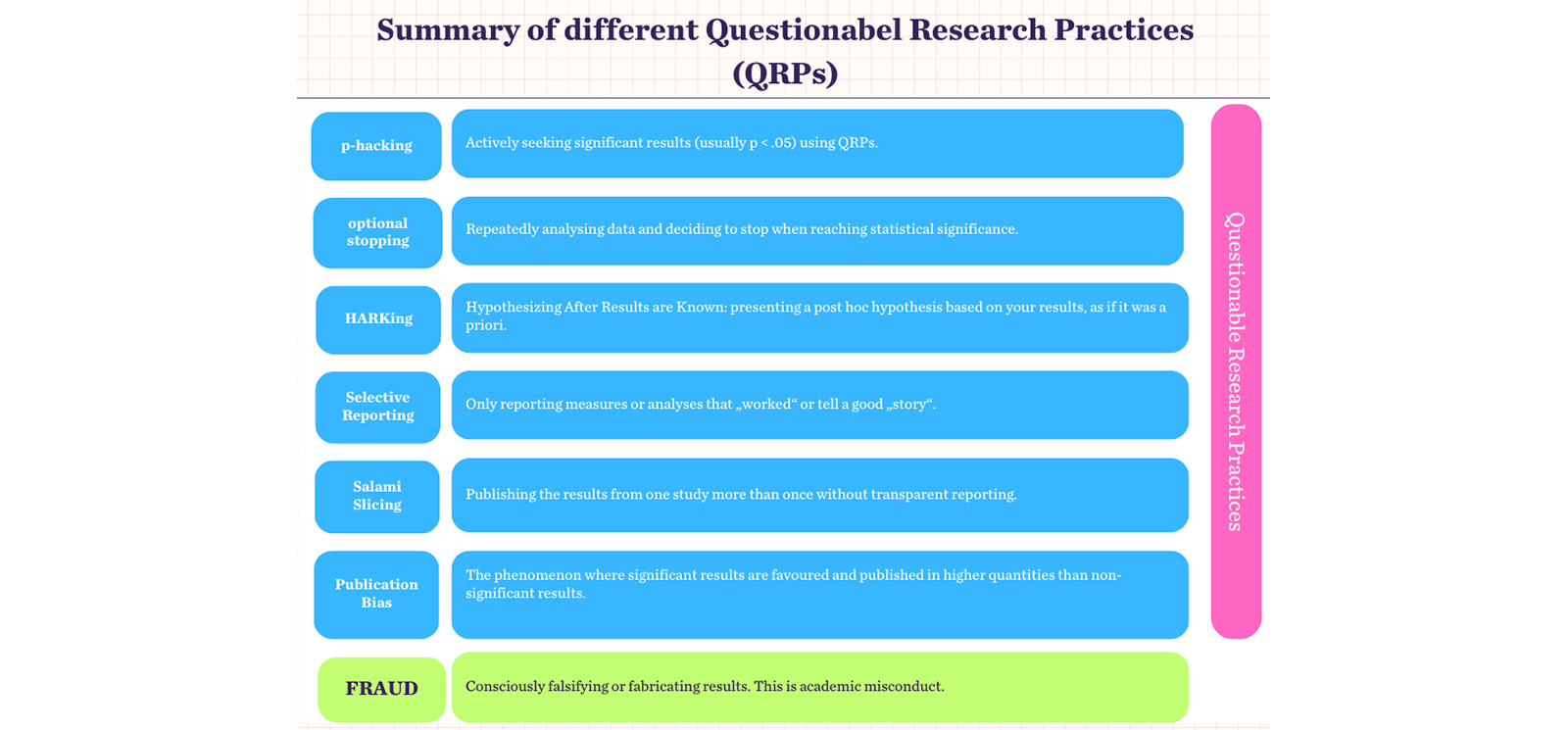

Other bias effects can also manifest themselves in research, such as the researcher’s urge to find consistent results (HARKing), where hypotheses are adjusted retroactively to the data to achieve significant results, or p-hacking, where researchers consciously or unconsciously manipulate statistical analyses to generate significant results. These questionable research practices can lead to non-reproducible results and a distortion of the research literature (see figure below). Sensitising for these effects and promoting transparency and openness can curb such practices.

(Source: Charlotte R. Pennington (2023): A Student´s Guide to Open Science.)
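The way p-hacking inflates the rate of false positives can also be illustrated with a short sketch. Assume, purely for illustration, that there is no true effect at all and that an analyst tests several independent outcome variables, reporting the study as a success if any one of them reaches significance at the 5% level. If each of k independent tests has a false-positive probability of α, the chance of at least one “significant” result is 1 − (1 − α)^k, which is about 23% for k = 5 instead of 5%. The Python code below checks this by simulation; the function names, the z-test with a known standard deviation of 1 and the 30 observations per outcome are all hypothetical choices.

```python
import random
import statistics

ALPHA = 0.05
Z_CRIT = 1.96  # two-sided critical value of a z-test at the 5% level


def outcome_is_significant(rng, n):
    """Draw n observations with true mean 0 and SD 1 (no real effect)
    and run a two-sided z-test of the sample mean against 0."""
    sample_mean = statistics.fmean(rng.gauss(0.0, 1.0) for _ in range(n))
    z = sample_mean * n ** 0.5  # standard error of the mean is 1 / sqrt(n)
    return abs(z) > Z_CRIT


def false_positive_rate(n_outcomes, n_studies=4000, n=30, seed=1):
    """Share of hypothetical null studies that report at least one
    'significant' outcome when n_outcomes independent outcomes are tested."""
    rng = random.Random(seed)
    hits = sum(
        any(outcome_is_significant(rng, n) for _ in range(n_outcomes))
        for _ in range(n_studies)
    )
    return hits / n_studies


for k in (1, 5, 10):
    theoretical = 1 - (1 - ALPHA) ** k
    print(f"{k:>2} outcomes tested: simulated {false_positive_rate(k):.3f}, "
          f"theoretical {theoretical:.3f}")
```

The first row corresponds to analysing a single, pre-specified outcome; the later rows show how quickly undisclosed flexibility in the analysis inflates the share of spurious findings.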

Awareness of the consequences of one-dimensional performance assessment, bias effects and questionable research practices is essential for the improvement of research quality and integrity. Promoting transparency, openness and a broader recognition of diverse research contributions are possible ways to steer science in a more sustainable and more objective direction.